SEO

10 mins read

If you have ever changed a title tag, rewritten a page, or added more content and then waited for rankings to improve, you have already felt the need for SEO testing, even if you did not call it that. Most teams make SEO changes with good intentions, but very few stop to ask the uncomfortable follow-up question: Did that change actually cause the result we’re seeing?

That uncertainty is where frustration starts. Traffic goes up, but you’re not sure why. Rankings drop, and you don’t know which change triggered it. Budgets get spent, time gets invested, and decisions get justified after the fact. Over time, SEO becomes less about strategy and more about educated guesses.

That is exactly why SEO testing matters.

This guide is for anyone responsible for growth, leads, or revenue who needs a way to separate coincidence from causation. It shows you how to find out what is working, what is neutral, and what is quietly holding your site back.

And once you understand how SEO testing really works, it becomes very hard to go back to guessing.

SEO testing is the process of making deliberate changes to your website and measuring how those changes affect organic traffic, visibility, and conversions.

In simple terms, it answers one big question every serious marketer eventually asks: “Is this SEO change actually helping, or do I just think it is?”

If you are running a real business, not a hobby site, this question matters more than most people admit.

We have seen teams rewrite pages, change titles, add content, remove content, tweak layouts, and celebrate wins that were never caused by their changes at all. And we have also seen quiet, boring tests unlock growth that paid ads could not buy. SEO testing sits right in the middle of that difference.

When people first hear about SEO testing, they often imagine something neat and controlled, like A/B testing ads where you change one thing, compare two results, and pick a winner.

But SEO is messier than that.

SEO testing means you change something on your site and then observe how search performance responds over time.

You are not testing in a lab. You are testing on the open internet, where Google updates algorithms, competitors change pages, and user behavior shifts daily.

That is why SEO testing is not about perfection. It is about direction.

When running an SEO test, ask questions like:

Once you have the answers, perform the test, measure the performance, and learn from the results. Then you decide what to roll out and what to kill.

At its core, SEO testing is about replacing guesswork with evidence.

SEO used to be slower and simpler. You followed a checklist and waited.

That world is gone.

Search results change faster. AI summaries are appearing in search results. Competitors publish content at scale. And budgets are getting tighter. Every change you make now has an opportunity cost.

SEO testing matters because it protects you from three very expensive mistakes.

Think of it like tuning an engine. You do not replace parts at random. You adjust, test, and listen.

Without testing, SEO becomes belief-driven, but with testing, it becomes decision-driven.

Many people doubt whether SEO testing is even possible, and it is a fair doubt. The honest answer is that SEO testing is possible, but it is never perfectly controlled.

Unlike ads or email, you cannot split traffic evenly or freeze external variables. No two pages are truly identical. And you cannot stop Google from changing the rules mid-test.

But here is the key insight most people miss. SEO testing is not about proving absolute truth. It is about increasing confidence.

If you run a thoughtful test and see a consistent lift across similar pages over enough time, you can make better decisions than if you had not tested at all.

Before you test anything, you need to know whether your site is ready. SEO testing only works when there is enough stability to tell cause from coincidence.

If your site is constantly changing or still struggling with basics, tests can create more confusion than clarity. Knowing when SEO testing makes sense, and when it does not, saves you from chasing misleading results and helps you focus your effort where it will actually pay off.

SEO testing makes sense when:

SEO testing does not make sense yet when:

In those cases, fix the basics first. Testing on a shaky foundation leads to misleading results.

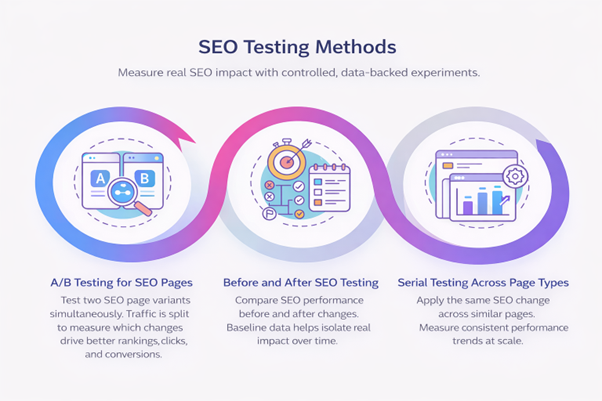

SEO testing is not a one-size-fits-all exercise. The way you test depends on how your site is built, how much traffic you get, and what kind of change you are trying to evaluate. Some methods work well for small, focused updates, while others are better suited for broader structural changes.

The real skill lies in picking an approach that gives you useful direction without introducing unnecessary noise or false confidence.

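A/B testing, often called split testing in SEO, is the closest thing to classic controlled testing.

You take a group of similar pages and split the pages, not the visitors. Some pages remain unchanged as a control group, and the others get a specific change. Over time, you compare how the two groups perform.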

This works best for:

The pages need to be similar in intent, structure, and traffic. Otherwise, you are comparing apples to oranges.
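To make the page split concrete, here is a minimal Python sketch, assuming you have exported each page's template type and monthly organic clicks. The `Page` class, the `split_pages` function, and the pair-matching approach are illustrative choices, not a standard tool: pages of the same type are ranked by traffic and paired, and a random draw decides which page in each pair gets the change.

```python
import random
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    template: str        # page type, e.g. "product" or "blog"; compare like with like
    monthly_clicks: int  # organic clicks, used to balance traffic across groups


def split_pages(pages, seed=42):
    """Split similar pages into a control group and a variant group.

    Within each template, pages are sorted by traffic and taken in adjacent
    pairs; a random shuffle decides which page of each pair gets the change,
    so both groups end up with a similar traffic mix.
    """
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    by_template = {}
    for page in pages:
        by_template.setdefault(page.template, []).append(page)

    control, variant = [], []
    for group in by_template.values():
        group.sort(key=lambda p: p.monthly_clicks, reverse=True)
        for i in range(0, len(group), 2):
            pair = group[i:i + 2]
            rng.shuffle(pair)
            control.append(pair[0])
            if len(pair) == 2:
                variant.append(pair[1])
            # With an odd count, the last unpaired page stays in control.
    return control, variant


# Hypothetical product pages with their monthly organic clicks.
clicks = [900, 850, 400, 390, 120, 110]
pages = [Page(f"/products/item-{n}", "product", c) for n, c in enumerate(clicks)]
control, variant = split_pages(pages)
print("control:", [p.url for p in control])
print("variant:", [p.url for p in variant])
```

Pairing by traffic before randomizing is one simple way to keep a few high-traffic pages from landing in the same group and skewing the comparison.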

Before-and-after testing is the most common method, and the most misused.

You record performance, make a change, and compare the results before and after. It is simple and accessible, but also risky.

Without enough time, context, and control, changes in traffic or rankings can be caused by seasonality, algorithm updates, or competitor activity rather than the change you made.
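One way to reduce that risk is to measure the same before and after windows on a set of untouched comparison pages and subtract their change, a simple difference-in-differences calculation. The sketch below assumes you have daily organic click counts for both sets and equal-length windows; the function names and numbers are made up for illustration.

```python
from statistics import mean


def pct_change(before, after):
    """Percent change in mean daily clicks between two equal-length windows."""
    if len(before) != len(after):
        raise ValueError("compare windows of equal length")
    base = mean(before)
    return (mean(after) - base) * 100 / base


def adjusted_lift(test_before, test_after, comp_before, comp_after):
    """Change on edited pages minus change on untouched comparison pages.

    Anything that moved both sets equally (seasonality, an algorithm update)
    cancels out, leaving a rough estimate of the edit's own effect.
    """
    return pct_change(test_before, test_after) - pct_change(comp_before, comp_after)


# Hypothetical daily organic clicks, 7 days before and 7 days after the change.
test_before = [100, 102, 98, 101, 99, 100, 100]
test_after = [120, 118, 122, 121, 119, 120, 120]
comp_before = [200, 198, 202, 200, 201, 199, 200]
comp_after = [220, 222, 218, 220, 219, 221, 220]

naive = pct_change(test_before, test_after)
adjusted = adjusted_lift(test_before, test_after, comp_before, comp_after)
print(f"naive before/after lift: {naive:.1f}%")
print(f"lift after subtracting comparison pages: {adjusted:.1f}%")
```

With these numbers, the edited pages rose 20%, but the untouched pages rose 10% over the same window, so only about 10 points of the lift are plausibly due to the change. The 7-day windows keep the example short; real SEO tests usually need much longer ones.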

Still, this method is useful when:

This approach applies one change across an entire set of similar pages. Instead of isolating one URL, you observe how the group as a whole behaves over time.

For example, you update all blog title tags to include clearer intent signals, then track the combined performance of the blog section.

This method accepts that individual pages will react differently and focuses on overall direction instead of isolated wins.
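Here is a minimal sketch of what "overall direction" can mean in numbers, assuming you have organic clicks per URL for equal-length windows before and after the change. The `group_summary` function and its three measures are an illustrative choice, not a standard report: the total lift for the group, the median change per page, and the share of pages that improved.

```python
from statistics import median


def group_summary(before, after):
    """Summarize how a group of pages responded to the same change.

    before and after map URL -> organic clicks over equal-length windows.
    Returns the overall group lift, the median per-page change, and the share
    of pages that improved, so a single outlier cannot decide the verdict.
    """
    urls = [u for u in before if u in after and before[u] > 0]
    total_before = sum(before[u] for u in urls)
    total_after = sum(after[u] for u in urls)
    per_page = [(after[u] - before[u]) * 100 / before[u] for u in urls]
    return {
        "group_lift_pct": (total_after - total_before) * 100 / total_before,
        "median_page_change_pct": median(per_page),
        "share_improved": sum(1 for c in per_page if c > 0) / len(per_page),
    }


# Hypothetical clicks for four blog posts before and after a title-tag update.
before = {"/blog/a": 400, "/blog/b": 200, "/blog/c": 100, "/blog/d": 50}
after = {"/blog/a": 440, "/blog/b": 230, "/blog/c": 90, "/blog/d": 60}
summary = group_summary(before, after)
print(summary)
```

Here one post lost clicks, but the group grew about 9.3% overall and three of the four pages improved, which is the kind of consistent direction this method looks for.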

This approach is especially useful when:

When you run an SEO test, the numbers you choose to watch can shape the conclusions you draw. It is easy to get distracted by rankings or short-term traffic spikes, but those signals rarely tell the full story.

If you want testing to guide real decisions, you need to focus on metrics that reflect visibility, engagement, and actual business impact, not just movement on a chart.

Key metrics to track include:

Rankings alone are not enough. Traffic without engagement is noise. Testing works when metrics align with intent.
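As an illustration, suppose you export impressions and clicks from Google Search Console and sessions, engaged sessions, and conversions from your analytics tool. The `PageMetrics` class below is a hypothetical structure that turns those counts into ratios covering visibility, engagement, and business impact; how you define an engaged session or a conversion is up to you.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    impressions: int       # times the page appeared in search results
    clicks: int            # organic clicks from those results
    sessions: int          # organic sessions landing on the page
    engaged_sessions: int  # sessions meeting your own engagement definition
    conversions: int       # leads or sales from those sessions

    @staticmethod
    def _ratio(part, whole):
        # Avoid dividing by zero for pages with no data yet.
        return part / whole if whole else 0.0

    def ctr(self):
        """Visibility turning into visits."""
        return self._ratio(self.clicks, self.impressions)

    def engagement_rate(self):
        """Visits turning into meaningful engagement."""
        return self._ratio(self.engaged_sessions, self.sessions)

    def conversion_rate(self):
        """Visits turning into business outcomes."""
        return self._ratio(self.conversions, self.sessions)


# Hypothetical numbers: more clicks after the change, but no more conversions.
before = PageMetrics(impressions=10_000, clicks=300, sessions=290, engaged_sessions=150, conversions=6)
after = PageMetrics(impressions=10_000, clicks=450, sessions=440, engaged_sessions=160, conversions=6)

for label, m in (("before", before), ("after", after)):
    print(f"{label}: CTR {m.ctr():.1%}, engagement {m.engagement_rate():.1%}, "
          f"conversion {m.conversion_rate():.1%}")
```

In this example, CTR climbs from 3% to 4.5%, yet both the engagement rate and the conversion rate fall, so the extra traffic is not yet worth celebrating.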

Running the test itself is where most teams go wrong. They jump to changes without clarity. Most SEO teams do not fail because their ideas are bad; they fail because their process is messy.

Someone changes a page, another person updates internal links, a third tweaks headings, and then everyone tries to explain the results after the fact. That is not testing; it is guessing with extra steps. A good SEO test follows a steady rhythm that keeps you focused and makes the outcome easier to trust.

Step 1: You form a hypothesis. You state what you believe will happen and why. For example, increasing content depth may improve rankings for long-tail queries.

Step 2: You choose the right test type. Not every idea deserves the same method. Match the method to the risk.

Step 3: You pick suitable pages. Pages need traffic, stability, and relevance. High-traffic pages produce faster learning.

Step 4: You define the variable and change one meaningful thing, not five small things.

Step 5: You create the variation. Make it different enough to matter, but not so extreme that it breaks the user experience.

Step 6: You ensure tracking is clean. This is where advanced analytics matter more than most people realize.

Step 7: You run the test long enough. Days are rarely enough. Weeks or months are often required.

Step 8: You analyze results calmly. Look for consistency, not spikes. Ask what changed and why.

Step 9: You implement winners carefully. Roll out changes with context. Keep user experience intact.

Step 10: You keep watching. SEO testing never truly ends.
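Several of these steps can be turned into a pre-flight check before anything goes live. This is a minimal sketch: the `SeoTestPlan` class and the 100-clicks-per-month traffic floor are hypothetical, and the minimum durations come from the rule of thumb this guide gives for test length (2-4 weeks for meta changes, 4-6 weeks for content or links).

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

# Minimum run lengths based on this guide's rule of thumb:
# 2-4 weeks for meta changes, 4-6 weeks for content or link changes.
MIN_WEEKS = {"meta": 2, "content": 4, "links": 4}


@dataclass
class SeoTestPlan:
    hypothesis: str                  # what you expect to happen, and why
    change_type: str                 # "meta", "content", or "links"
    variables: list                  # what changes; should contain exactly one item
    pages: dict = field(default_factory=dict)  # URL -> monthly organic clicks
    start: Optional[date] = None
    end: Optional[date] = None
    min_monthly_clicks: int = 100    # hypothetical threshold; tune it to your site

    def problems(self):
        """Return the reasons this plan is not ready to run (empty if ready)."""
        issues = []
        if not self.hypothesis.strip():
            issues.append("state a hypothesis: what will happen and why")
        if len(self.variables) != 1:
            issues.append("change one meaningful thing, not several")
        low = [url for url, c in self.pages.items() if c < self.min_monthly_clicks]
        if not self.pages or low:
            issues.append("pick pages with enough traffic to learn from")
        if self.start is None or self.end is None:
            issues.append("set start and end dates")
        else:
            needed = MIN_WEEKS.get(self.change_type, 4)
            if (self.end - self.start).days < needed * 7:
                issues.append(f"run for at least {needed} weeks")
        return issues


rushed = SeoTestPlan(
    hypothesis="Comparison tables will lift clicks on long-tail queries",
    change_type="content",
    variables=["comparison table", "new H2s"],
    pages={"/guides/a": 1200, "/guides/b": 40},
    start=date(2024, 3, 1),
    end=date(2024, 3, 15),
)
ready = SeoTestPlan(
    hypothesis="Comparison tables will lift clicks on long-tail queries",
    change_type="content",
    variables=["comparison table"],
    pages={"/guides/a": 1200, "/guides/c": 800},
    start=date(2024, 3, 1),
    end=date(2024, 4, 12),
)
print(rushed.problems())
print(ready.problems())
```

The rushed plan fails three checks (two variables, a low-traffic page, and a two-week window for a content change), while the second plan passes. A checklist like this does not make a test valid on its own, but it catches the most common shortcuts before they cost you weeks.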

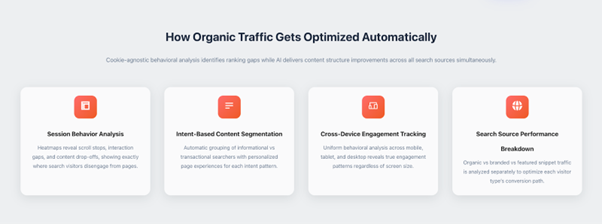

This is also where platforms like CausalFunnel’s DeepID attribution become valuable. Instead of showing only surface metrics, DeepID connects organic visits to deeper user behavior and conversions across sessions. That makes test results more reliable, especially when SEO changes influence longer decision cycles.

Many SEO tests fail because teams measure the wrong outcome.

SEO rarely converts on the first visit. People come, leave, return, compare, and then act. If your testing only looks at last-click data, you miss half the story.

This is where CausalFunnel’s funnel analytics becomes especially useful. It tracks how organic traffic contributes across the entire journey, not just the final click. When you test SEO changes, this kind of visibility helps you understand whether improvements actually influence revenue, not just vanity metrics.

Without proper attribution, you risk optimizing for numbers that feel good but do not pay bills.
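To see how much last-click reporting can hide, here is a minimal sketch comparing last-click credit with a simple linear (equal-split) model over a few hypothetical customer journeys. It is a generic illustration of multi-touch attribution, not how CausalFunnel or any specific platform computes credit; the function names are made up.

```python
from collections import defaultdict


def last_click(journey):
    """Give all of the conversion credit to the final touch."""
    return {journey[-1]: 1.0}


def linear(journey):
    """Split the conversion credit equally across every touch."""
    credit = defaultdict(float)
    for channel in journey:
        credit[channel] += 1 / len(journey)
    return dict(credit)


def total_credit(journeys, model):
    """Sum each channel's credit across all converting journeys."""
    totals = defaultdict(float)
    for journey in journeys:
        for channel, share in model(journey).items():
            totals[channel] += share
    return dict(totals)


# Hypothetical converting journeys: each list is one customer's visits, in order.
journeys = [
    ["organic", "email", "direct"],
    ["organic", "direct"],
    ["paid", "organic", "direct"],
    ["direct"],
]

by_last_click = total_credit(journeys, last_click)
by_linear = total_credit(journeys, linear)
print("last click:", by_last_click)
print("linear:    ", {k: round(v, 2) for k, v in by_linear.items()})
```

Organic search appears in three of the four journeys but gets zero credit under last click, while the linear model gives it a little over one conversion's worth. Linear is only one of several multi-touch models, and none of them proves causation on its own.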

Most SEO tests fail for reasons that are completely avoidable. The problem is not a lack of effort or intelligence. It is usually a breakdown in discipline. When testing is rushed or treated casually, the results can push teams in the wrong direction and lead to costly decisions.

One of the most common mistakes is changing too many things at once. A title update, a content rewrite, and a layout adjustment might all seem harmless on their own, but when they happen together, there is no way to know which change influenced the outcome.

Another expensive error is ending tests too early. Rankings fluctuate, traffic ebbs and flows, and search engines need time to crawl, process, and evaluate changes. When teams check results after a few days and call a winner, they are often reacting to noise rather than signal. You need patience to reach a conclusion.
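Before calling a winner, it helps to check whether the change is larger than the site's ordinary week-to-week fluctuation. The sketch below is a rough heuristic (a z-score style ratio that assumes weeks vary independently, which real traffic does not), not a formal significance test; the function name and figures are hypothetical.

```python
from statistics import mean, stdev


def lift_vs_noise(baseline_weeks, test_weeks):
    """Express the post-change lift in units of normal weekly variation.

    baseline_weeks: weekly organic clicks from several weeks before the change.
    test_weeks: weekly organic clicks after the change.
    Rough heuristic: it treats weeks as independent, which real traffic is not.
    """
    if len(baseline_weeks) < 3:
        raise ValueError("need several baseline weeks to estimate noise")
    weekly_noise = stdev(baseline_weeks)
    if weekly_noise == 0:
        raise ValueError("baseline shows no variation to compare against")
    # The average of more test weeks is steadier, so its expected noise shrinks.
    expected_noise = weekly_noise / len(test_weeks) ** 0.5
    return (mean(test_weeks) - mean(baseline_weeks)) / expected_noise


# Hypothetical weekly clicks for eight weeks before the change.
baseline = [1000, 1040, 980, 1010, 990, 1020, 960, 1000]

one_good_week = lift_vs_noise(baseline, [1030])
four_weeks = lift_vs_noise(baseline, [1060, 1070, 1055, 1065])
print(f"after 1 week: {one_good_week:.1f}x normal noise")
print(f"after 4 weeks: {four_weeks:.1f}x normal noise")
```

With these numbers, one good week sits at roughly 1.2 times normal variation, which is easy to get by chance, while four consistently higher weeks come out around 5 times. As a loose guide, values below about 2 are hard to distinguish from ordinary fluctuation.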

Documentation is also where many teams quietly lose money. Tests get run, insights are learned, and then everything disappears when someone leaves or shifts roles. Without a clear record of what was tested, why it was tested, and what happened, teams repeat the same experiments and mistakes. A simple test log creates continuity and turns individual experiments into long-term knowledge.
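A test log does not need special tooling. The sketch below, with hypothetical field names and an example entry, appends each experiment as one JSON object per line (the JSON Lines convention) so the file can be searched before anyone repeats a test.

```python
import json
import os
import tempfile
from datetime import date


def log_test(path, *, name, hypothesis, change, pages, start, end, result, decision):
    """Append one experiment record to a JSON Lines file (one JSON object per line)."""
    record = {
        "name": name,
        "hypothesis": hypothesis,
        "change": change,
        "pages": pages,
        "start": start.isoformat(),
        "end": end.isoformat(),
        "result": result,
        "decision": decision,  # e.g. "roll out", "kill", or "retest"
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def load_tests(path):
    """Read every logged experiment; a missing file means nothing was logged yet."""
    if not os.path.exists(path):
        return []
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def previous_tests(path, change):
    """Check the log before repeating an experiment someone already ran."""
    return [t for t in load_tests(path) if t["change"] == change]


# Write to a temporary file for this demo; a real log would live somewhere shared.
log_path = os.path.join(tempfile.mkdtemp(), "seo_tests.jsonl")
log_test(
    log_path,
    name="blog-title-intent",
    hypothesis="Clearer intent in blog titles will lift organic CTR",
    change="title tag rewrite",
    pages=["/blog/a", "/blog/b", "/blog/c"],
    start=date(2024, 1, 8),
    end=date(2024, 2, 5),
    result="hypothetical: group CTR up, conversions flat",
    decision="retest",
)
print(previous_tests(log_path, "title tag rewrite"))
```

Recording the hypothesis and the decision alongside the result is what lets the next person understand why a change was kept or dropped, not just that it happened.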

The final mistake is overconfidence. Even well-run SEO tests do not produce absolute truths. They reveal tendencies, not guarantees. Treating test results as universal rules can lead to over-optimization and missed context.

If you are wondering where to start, here are grounded ideas that actually work.

Sooner or later, anyone responsible for growth asks whether SEO testing is worth it. It takes planning, patience, and discipline, and it is fair to wonder whether the return justifies the effort. If SEO is not a serious channel for your business, or if content is published casually without clear goals, testing may feel like unnecessary overhead.

But when organic search drives leads, sales, or long-term demand, testing becomes one of the most valuable investments you can make. It helps you avoid scaling weak ideas, protect strong ones, and make changes with confidence instead of hope. SEO testing does not promise instant results or dramatic shortcuts. What it offers is clarity.

And in a channel as slow and competitive as SEO, clarity compounds.

SEO changes are easy to make. Good decisions are harder. Testing bridges that gap. The real question is not whether SEO testing works. It is whether you are comfortable letting untested assumptions guide your growth.

SEO testing is the process of making targeted changes to a website, such as content, meta tags, headings, or backlinks, and tracking how they affect organic search performance, including rankings, traffic, and visibility in search results.

While A/B testing focuses on user conversions and experience, SEO testing targets search engine behavior, using control groups to isolate the effect of a change on metrics like impressions and clicks.

SEO testing checks hypotheses against real metrics. It helps demonstrate ROI, guides the optimization of elements like page speed or content length, and supports growth in traffic and rankings.

Typically 2-4 weeks for meta changes, 4-6 weeks for content or links, to gather enough data while accounting for Google's crawl cycles.
