SEO

10 mins read

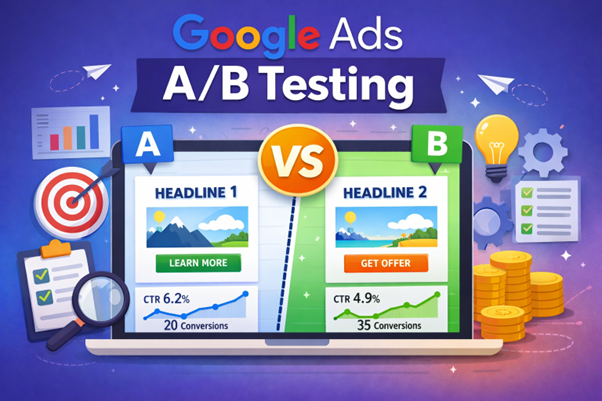

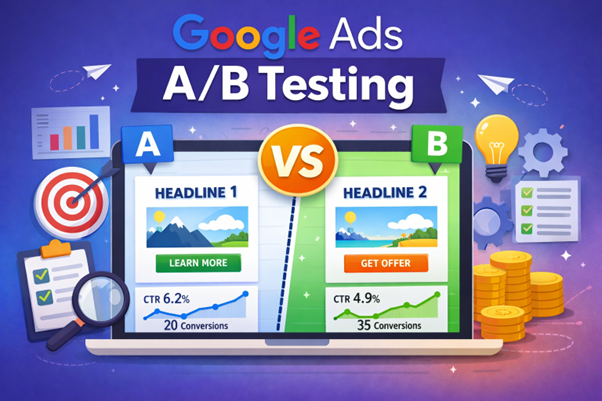

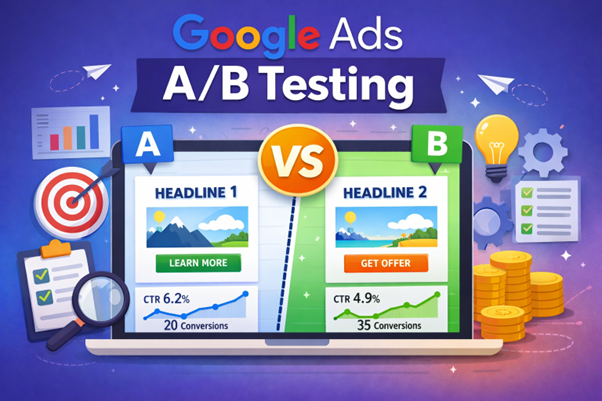

Want to know which ads actually work and which are wasting your budget? A/B testing gives you clear answers instead of guesswork. Google Ads A/B testing is the process of comparing two controlled versions of ads, campaigns, or experiences to find what truly drives better results. This guide explains:

Google Ads A/B testing works when one change is tested at a time, under stable conditions, and measured against your marketing goal. Most poor results come from testing too much, too early, or without enough data.

That single truth answers the biggest doubt right away.

Many advertisers believe A/B testing always improves performance. That belief causes wasted spend, false winners, and confused accounts. Testing is powerful, but only when the situation is right and the method is clean.

This playbook walks through those moments calmly. The aim is not to test more, but to test smarter.

A common scene plays out again and again. A campaign struggles. New headlines get added. Budgets shift. Bids change. Then, the results are checked after a few days.

Nothing improves. Or worse, performance drops.

This is not bad luck. It is bad testing.

Google Ads A/B testing fails most often because the account is not ready for it. Automation needs stability. Algorithms need time. Testing needs isolation. When all three collide, noise replaces insight.

The pages ranking today explain how to run tests. Very few explain when not to.

That gap matters.

Google Ads A/B testing is no longer just about swapping headlines or descriptions. In 2026, testing lives inside a system driven by automation, machine learning, and predictive bidding.

At its core, Google Ads A/B testing means comparing two controlled setups while keeping everything else the same. The goal is to isolate cause and effect.

What has changed is the surface area.

Testing can now happen across:

The principle stays simple. The execution does not.

Automation decides more than ever. That makes clean testing harder, not easier.

These terms often get used as if they mean the same thing. They do not.

A/B testing is the concept. Google Ads Experiments is a tool.

A/B testing is a method of learning. Experiments are one way to apply it inside Google Ads.

This distinction matters because not every test belongs inside Experiments. Some tests break when automation interferes. Others need manual control.

Understanding this difference prevents false confidence.

Why A/B test your Google Ads when automation already optimizes bids and placements?

Because automation optimizes for what it sees, not what it understands.

Algorithms react to data patterns. They do not understand intent shifts, emotional triggers, or message clarity. Humans still decide what message enters the system.

Testing reveals which inputs deserve more spend.

It answers questions that matter in the real world:

Without testing, decisions rely on assumptions. With testing, decisions rest on evidence.

Testing works best when the environment is calm.

That sounds simple. It is often ignored.

Google Ads A/B testing makes sense when:

High-volume accounts benefit the most. More data shortens test time. Noise fades faster.

This is where Google Ads A/B testing results become meaningful instead of misleading.

This part saves money.

Testing should pause when:

Testing during instability produces random outcomes. One version “wins” simply because it got luckier traffic.

The temptation to test early is strong. Resisting it is smarter.

Early testing feels productive. It looks proactive. In reality, it often slows growth. Testing too soon resets learning. It fragments data. It confuses automation. The account never settles.

This is why many advertisers say Google Ads A/B testing does not work. The issue is timing, not the method.

Waiting feels uncomfortable. But patience often outperforms action.

Before starting any test, ask one question:

“What decision will this test help make?”

If no clear decision exists, the test should not exist either.

Good tests answer clear questions:

Bad tests exist out of habit.

This thinking alone filters out half the waste seen in most accounts.

Google Ads multivariate testing sounds advanced. In practice, it often muddies results.

Testing multiple variables at once makes it impossible to know what caused the change. Automation already mixes signals. Adding more variables multiplies confusion.

Multivariate testing requires huge volume and strict control. Most accounts do not meet that bar.

Single-variable A/B testing remains the most reliable approach in Google Ads.

Focusing on one change at a time works far better than trying to outsmart the system with complicated tests.

Website A/B testing and Google Ads A/B testing should talk to each other.

They often do not.

Ads promise something. Pages deliver something else. Testing both in isolation creates blind spots.

Website A/B testing helps answer questions ads cannot:

When combined thoughtfully, ad tests and page tests reinforce each other instead of fighting for credit.

Is this worth the money?

Testing costs time, budget, and patience. Not every account can afford that.

For small budgets, fewer tests done well beat many tests done poorly. Sometimes, improving fundamentals outperforms experimentation.

Testing is not a badge of maturity. It is a tool. Tools should be picked only when needed.

That mindset separates stable accounts from chaotic ones.

Google Ads A/B testing results rarely deliver dramatic overnight wins.

Small gains compound. A few percentage points matter. Over time, they add up.
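To see how small gains add up, here is a minimal sketch. It assumes each winning test delivers an independent, multiplicative lift on the same metric, which real accounts will only approximate. The function name and the 3% figure are illustrative, not benchmarks.

```python
def compounded_lift(lift_per_test: float, winning_tests: int) -> float:
    """Total relative lift after stacking several equal, multiplicative wins."""
    return (1 + lift_per_test) ** winning_tests - 1


# Hypothetical scenario: six winning tests, each worth a 3% relative gain.
total = compounded_lift(0.03, 6)
print(f"Total lift after six wins: {total:.1%}")  # prints "Total lift after six wins: 19.4%"
```

Six modest wins land close to a 20% improvement, even though none of them would look dramatic on its own.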

Expecting massive jumps sets the wrong benchmark. The goal is clarity, not miracles.

Understanding this keeps decisions grounded and prevents chasing noise.

Google Ads A/B testing works best when it follows a fixed framework, not instincts or habits. Without structure, tests blur together, and results lose meaning. With structure, even small accounts can learn something useful.

This framework exists to answer one question clearly.

“What should change next?”

Every step below protects data quality, budget, and decision clarity.

The fastest way to break a test is to change more than one thing at once.

This happens often. A new headline goes live. At the same time, bids change. Budgets increase. A new audience signal gets added. Then results shift, and nobody knows why.

A clean Google Ads A/B testing example always tests one variable.

Only one.

That variable can be:

But never more than one at a time.

If two changes go live together, the test is already invalid.
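One way to enforce the single-variable rule is to write down the control and variant settings and compare them before launch. The sketch below uses plain dictionaries with made-up setting names. They are placeholders for whatever you track in your own notes, not Google Ads API fields.

```python
def changed_settings(control: dict, variant: dict) -> list[str]:
    """Return the names of settings that differ between control and variant."""
    all_keys = control.keys() | variant.keys()
    return sorted(key for key in all_keys if control.get(key) != variant.get(key))


def is_clean_test(control: dict, variant: dict) -> bool:
    """A clean A/B test changes exactly one setting."""
    return len(changed_settings(control, variant)) == 1


# Hypothetical settings, written down before launch.
control = {"headline": "Book a Free Demo", "bid_strategy": "Target CPA", "daily_budget": 100}
clean_variant = {**control, "headline": "See It in Action"}
messy_variant = {**control, "headline": "See It in Action", "bid_strategy": "Maximize Conversions", "daily_budget": 150}

print(changed_settings(control, clean_variant), is_clean_test(control, clean_variant))
print(changed_settings(control, messy_variant), is_clean_test(control, messy_variant))
```

If the list holds more than one name, the test is measuring a bundle of changes rather than a single cause.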

Single-variable testing feels slow. But it produces usable insight.

When only one thing changes, results can be trusted. When many things change, results only create opinions.

Google Ads already mixes signals behind the scenes. Testing needs to reduce noise, not add more.

This is why Google Ads multivariate testing fails for most accounts. It assumes control where none exists.

Simple tests scale. Complex tests confuse.

No.

Not every element deserves testing.

Some things must be fixed first. Poor tracking. Weak landing pages. Confusing offers. Testing on top of broken basics only measures failure faster.

A useful filter helps here.

Before testing, ask:

If the answer is unclear, testing can wait.

Google Ads A/B testing fails quietly when success is unclear.

One person checks the click-through rate. Another checks the cost per conversion. A third looks at impressions. Everyone reaches a different conclusion.

That confusion starts before the test even launches.

Every test needs one primary metric.

Only one.

The metric depends on what is being tested.

For ad copy tests:

For landing page tests:

For bidding strategy tests:

Google Ads A/B testing results become reliable only when success is defined upfront.

Click-through rate is easy to see. It feels intuitive. It is also misleading.

A headline that attracts curiosity can raise CTR while lowering conversion quality. More clicks do not always mean better traffic.

Many tests “win” on CTR and quietly lose on profit.

That is why CTR should support decisions, not lead them.
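A quick calculation shows how a CTR "win" can hide a cost problem. All numbers below are invented for illustration, and the class and field names are assumptions rather than anything exported by Google Ads.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    impressions: int
    clicks: int
    conversions: int
    cost: float

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.clicks

    @property
    def cost_per_conversion(self) -> float:
        return self.cost / self.conversions


# Hypothetical results: the curiosity headline earns more clicks but fewer conversions.
control = VariantStats("control", impressions=10_000, clicks=300, conversions=30, cost=600.0)
curiosity = VariantStats("curiosity headline", impressions=10_000, clicks=450, conversions=27, cost=900.0)

for variant in (control, curiosity):
    print(
        f"{variant.name}: CTR {variant.ctr:.1%}, "
        f"conversion rate {variant.conversion_rate:.1%}, "
        f"cost per conversion {variant.cost_per_conversion:.2f}"
    )
```

In this made-up case the curiosity headline lifts CTR from 3.0% to 4.5%, yet its cost per conversion is clearly higher than the control's. Judged on CTR alone, the wrong ad would be declared the winner.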

Testing without limits invites chaos.

Guardrails protect budgets and patience.

Before launching any Google Ads A/B test, three boundaries must be set:

Skipping these decisions leads to emotional reactions later.

A 50/50 split feels fair. It is not always smart.

For risky tests, smaller splits protect performance. For confident tests, larger splits speed learning.

Common approaches include:

The key is intention. Every split should exist for a reason.

Budgets should remain stable during tests.

Increasing budget mid-test changes traffic quality. Decreasing budget limits learning. Both distort results.

If the budget must change, the test should pause.

Stable inputs create reliable outputs.

Different types of tests need different tools.

Google Ads offers multiple testing paths. Choosing the wrong one weakens results before launch.

Three main options exist:

Each has strengths and limits.

Google Ads Experiments work best for:

Experiments split traffic at the campaign level. They work well when automation needs isolation.

They do not work well for rapid creative testing.

Understanding this avoids frustration.

Ad Variations suit:

They apply changes quickly across many ads. This speeds testing but reduces control.

Results must be interpreted carefully, especially with responsive search ads.

Ad Variations are efficient, not precise.

Manual testing feels old-fashioned. It remains powerful.

Manual tests help when:

Manual tests require discipline. Naming conventions, timing, and isolation matter.

Done well, they provide clarity when native tools fall short.

Ending tests early is tempting.

Early data often looks exciting. One version jumps ahead. Confidence rises. Changes go live.

Then results fade.

This happens because early data lies.

Time matters. Volume matters more.

Tests should run until:

There is no universal number. Context decides.

Rushing tests creates false winners. Patience reveals patterns.
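To put a rough number on "enough volume", a standard two-proportion sample size approximation can help. This is a textbook formula, not the method Google Ads uses internally. The 5% significance level and 80% power defaults are common conventions assumed here, and the function name is illustrative.

```python
import math
from statistics import NormalDist


def clicks_per_arm(baseline_rate: float, relative_lift: float,
                   alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate clicks needed per arm to detect a relative lift in conversion rate."""
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical baseline: a 5% conversion rate.
print(clicks_per_arm(0.05, relative_lift=0.20))  # detecting a 20% relative lift
print(clicks_per_arm(0.05, relative_lift=0.10))  # a smaller lift needs far more clicks
```

The smaller the expected lift, the more clicks each arm needs. This is why low-volume accounts struggle to confirm modest improvements in a few days.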

Early leads feel convincing. They rarely last.

Google Ads traffic varies by time, device, audience, and intent. Short tests capture only fragments.

Waiting feels uncomfortable. It is often the difference between insight and illusion.

Analysis should feel boring. That is a good sign.

Strong results speak quietly. Weak results shout early.

When reviewing Google Ads A/B testing results, focus on:

Ignore vanity metrics. Resist storytelling.

A test wins when:

Small gains still matter. Clarity matters more.

If results are mixed, the test did its job. It showed uncertainty.

Uncertainty is useful.

Applying test results requires care.

Abrupt changes can reset learning. Gradual rollouts protect momentum.

Best practices include:

Scaling is not a switch. It is a process.

The test ends. Results look slightly better. Doubt creeps in.

“Is this improvement real?”

“Is this worth the risk?”

“What if performance drops?”

These questions are normal.

Good testing does not eliminate doubt. It reduces it enough to move forward.

That is success.

Google Ads Experiments are the safest and most reliable way to run Google Ads A/B testing today.

They allow controlled tests inside the same campaign, with shared budgets, traffic splits, and clean data. No guesswork. No duplicate campaigns fighting each other.

Many advertisers think they are testing when they are not. Experiments fix that.

This section explains what Google Ads Experiments actually do, when to use them, how to set them up correctly, and what real testing looks like in the wild.

Google Ads Experiments are a built-in testing feature inside Google Ads. They split traffic between a control version and a test version of the same campaign.

Both versions:

Only one variable is changed. Everything else stays the same.

That single detail is what makes experiments powerful.

Without experiments, most “tests” are polluted by:

Experiments reduce those problems.

Google Ads has changed a lot.

Automation is everywhere. Broad match is pushed harder. AI assets rotate constantly. Performance Max absorbs traffic across networks.

Because of this, manual comparison is no longer reliable. Experiments give control in an automated world.

They help answer real doubts like:

Without experiments, those answers stay fuzzy.

Experiments work best when traffic is steady and goals are clear.

Testing bad data only gives bad answers.

Google Ads allows several experiment types. Each serves a different purpose.

This is the most common Google Ads A/B testing example.

Test ideas include:

Example

A lead generation campaign tests:

Both ads run under the same campaign. Only messaging changes.

These tests answer a big fear:

“Is Google’s automation actually better here?”

Common tests:

Real thinking moment

Will automated bidding reduce lead quality?

Or will it find better users over time?

Experiments reveal that truth.

Broad match is controversial.

But it works well in some accounts.

Experiments can test:

Same keywords. Same budget. Same bids.

Only match type changes.

This is one of the cleanest ways to test broad match safely.

Google Ads Experiments do not change website content directly.

But they can route traffic to different URLs.

This bridges Google Ads A/B testing and website A/B testing.

Example

Conversion tracking remains the same.

Now landing page performance becomes measurable.

Performance Max is hard to evaluate because it blends everything.

Experiments help by:

This is advanced testing. But the potential ROI is worth it.

This is where most people mess up.

Slow down here.

Pick a campaign that:

Avoid campaigns recently edited.

Ask one clear question.

Examples:

If two changes feel tempting, pause.

Split tests fail when changes stack.

Inside Google Ads:

Everything else stays untouched.

Recommended split:

For risky changes:

This reduces shock to performance.

This is where patience matters.

Minimum test duration:

Ending tests early creates false winners.

Looking at results is not just about green arrows.

CTR alone is not success.

High CTR with low conversions is a trap.

Low CPA with low volume may stall growth.

Ask:

Good tests answer business questions, not vanity metrics.

A service business tests:

Result:

Decision:

A B2B advertiser hesitates on broad match.

Experiment:

Result:

Decision:

Two landing pages tested via ad URLs:

Result:

Decision:

This is where website A/B testing and Google Ads testing connect.

Even smart marketers trip here.

Early wins vanish after learning stabilizes.

Seasonal spikes distort results.

Cheap leads are not always good leads.

Even small edits reset learning.

They ask quiet questions:

They do not chase quick wins.

Testing is not about being clever.

It is about being honest.

Google Ads A/B testing answers simple questions. Multivariate testing tries to answer many at once.

That difference sounds small, but it changes everything.

Google Ads multivariate testing means testing multiple variables at the same time to see how combinations perform.

Instead of testing:

It tests:

This creates multiple combinations inside one experiment.

On paper, this sounds powerful.

In real life, it is risky.

The biggest problem is data dilution.

Every extra variable splits traffic further.

A campaign with:

Most Google Ads accounts do not have enough conversions to support this.

Multivariate testing needs scale.

Most advertisers do not have it.
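The dilution is easy to quantify. The sketch below counts the combinations created by a few variables and spreads a month of conversions across them. The variable counts and the 120 conversions are hypothetical, chosen only to show the arithmetic.

```python
from itertools import product

# Hypothetical multivariate setup: 3 headlines x 2 descriptions x 2 landing pages.
headlines = ["headline A", "headline B", "headline C"]
descriptions = ["description A", "description B"]
landing_pages = ["page A", "page B"]

combinations = list(product(headlines, descriptions, landing_pages))

monthly_conversions = 120  # assumed total for the whole campaign
per_combination = monthly_conversions / len(combinations)

print(f"{len(combinations)} combinations")                          # prints "12 combinations"
print(f"{per_combination:.0f} conversions per combination per month")  # prints "10 conversions per combination per month"
```

Ten conversions per combination is far too few to separate a real difference from chance. A simple A/B test on the same campaign would give each arm 60.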

Multivariate testing can work in a few cases.

This is common in:

For everyone else, simple A/B tests win.

Instead of testing everything at once, smart advertisers test in layers.

This builds confidence step by step.

It feels slower.

It produces cleaner results.

These two often get mixed up.

They are not the same.

Both matter.

But timing matters more.

Website A/B testing should lead when:

Testing ads without fixing a broken page is pointless.

Google Ads testing should lead when:

Fix the front door before rearranging furniture.

Smart advertisers connect both.

Example

Now intent and experience align.

This is where conversion growth accelerates.

Results lie when rushed.

Green arrows do not equal truth.

Google shows confidence levels.

But context matters more.

Ask:

Winning statistically does not always mean winning practically.
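For a second opinion on the numbers, a two-proportion z-test can be run by hand. This is a generic statistics sketch and not a reproduction of how Google calculates its confidence levels. The function name and the click and conversion counts are invented.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, clicks_a: int, conv_b: int, clicks_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / clicks_a
    rate_b = conv_b / clicks_b
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    z_score = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z_score)))


# Same conversion rates (5.0% vs 6.5%), very different volumes.
small_test = two_proportion_p_value(50, 1_000, 65, 1_000)
large_test = two_proportion_p_value(500, 10_000, 650, 10_000)

print(f"Small test p-value: {small_test:.3f}")
print(f"Large test p-value: {large_test:.6f}")
```

With 1,000 clicks per arm, the gap could easily be noise. With 10,000 clicks per arm, the same gap is very unlikely to be chance. Even then, a low p-value says only that the difference is probably real, not that it is large enough to matter for the business.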

False winners happen when:

A test that wins in 7 days may lose in 30.

Patience protects budgets.

Testing during:

creates biased results.

Always ask:

Would this result hold next month?

If not, the test did not answer the real question.

Professionals focus on trends, not moments.

Short-term excitement is ignored.

Think of Google Ads testing like a driving test.

Rules matter.

Judgment matters more.

If a test does not change behavior, it is not worth running.

These moments decide success.

If scaling doubles spend but halves quality, the answer is no.

Yes, if conditions change. No, if intent stays the same.

Tests reveal truth faster than opinions.

Over time, patterns appear.

You learn:

Testing becomes calm.

Decisions feel easier.

They chase hacks.

They promise instant wins.

They forget that Google Ads testing is about learning, not tricks.

Learning compounds.

Shortcuts expire.

Google Ads A/B testing in 2026 is no longer about finding clever tweaks.

It is about surviving automation without losing control.

AI now writes ads.

AI chooses bids.

AI decides placements.

Testing is the only way to see what is actually helping and what is quietly hurting.

Automation is not optional anymore.

Broad match is default.

Responsive ads rotate endlessly.

Performance Max blends networks.

This creates a new problem.

Many changes happen without consent.

Testing becomes a safety net.

Google strongly encourages:

These often increase impressions and CTR.

But higher engagement does not always mean better outcomes.

Instead of mixing everything, isolate.

Experiment setup

Lock:

Only asset source changes.

AI assets:

Human assets:

Testing reveals which matters more for the goal.

Performance Max is often treated as untouchable.

That is a mistake.

Not true.

PMax often:

Testing exposes this.

You can.

Use experiments to compare:

Run them side by side.

Let data speak.

Broad match scares advertisers.

Rightfully so.

But avoiding it completely can stall growth.

Step-by-step:

This protects the account.

Yes, if done blindly.

No, if tested properly.

Experiments cap risk.

This is an underrated decision.

Testing forever creates paralysis.

Scaling too early creates chaos.

At this point, confidence exists.

Never scale:

Scaling amplifies mistakes faster than success.

Scaling is not just a budget increase.

It includes:

Each step is measured.

Think like someone being evaluated.

Ask:

Good testers think ahead.

Testing can feel uncomfortable.

A test might lose.

Ego gets bruised.

Budgets feel exposed.

That is normal.

Professionals test anyway.

They stop testing after a few wins.

They rely on automation blindly.

They react instead of planning.

Testing is not a phase.

It is a system.

Over months, clarity forms.

You know:

This reduces stress.

Results feel earned.

Google Ads A/B testing works best when it stops being an activity and becomes a habit.

One-off tests bring temporary wins.

A system builds long-term advantage.

The issue is not a lack of knowledge.

It is a lack of structure.

Most advertisers:

Without a system, learning leaks out.

A testing system answers one question clearly:

“What should be tested next, and why?”

If that answer is fuzzy, testing becomes noise.

Every test should begin with a simple sentence.

Examples:

If the reason cannot be stated clearly, pause.

Good tests are boring but useful.

This is non-negotiable.

A simple document works.

This prevents repeating failed ideas.

Memory fades. Logs do not.
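A log does not need special software. As a minimal sketch, the snippet below appends each test to a CSV file. The field names are assumptions about what is worth recording, and the example entry is invented. Adapt both to your own account.

```python
import csv
import tempfile
from dataclasses import asdict, dataclass, fields
from pathlib import Path


@dataclass
class ExperimentRecord:
    start_date: str
    campaign: str
    hypothesis: str
    variable_changed: str
    primary_metric: str
    result: str
    decision: str


def append_record(path: Path, record: ExperimentRecord) -> None:
    """Append one test to the log, writing the header row if the file is new."""
    is_new_file = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(ExperimentRecord)])
        if is_new_file:
            writer.writeheader()
        writer.writerow(asdict(record))


def read_log(path: Path) -> list[dict]:
    """Load every logged test as a list of dictionaries."""
    with path.open(newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


# Hypothetical entry, written to a temporary folder so the demo cleans up after itself.
example = ExperimentRecord(
    start_date="2026-01-05",
    campaign="Search - Brand",
    hypothesis="A benefit-led headline lowers cost per conversion",
    variable_changed="headline",
    primary_metric="cost per conversion",
    result="inconclusive after 4 weeks",
    decision="keep control",
)

with tempfile.TemporaryDirectory() as folder:
    log_path = Path(folder) / "ads_test_log.csv"
    append_record(log_path, example)
    logged_rows = read_log(log_path)

print(logged_rows[0]["hypothesis"])
```

In practice the log would live in a shared, permanent location. A spreadsheet works just as well, provided every test gets a row.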

Not all tests are equal.

High-value tests answer big questions.

Low-impact tests:

Start with decisions that change direction.

Testing chaos kills consistency.

Plan ahead.

Example:

This keeps focus sharp.

This mindset changes everything.

A test that fails still:

Failure is information.

No test is wasted if it answers a question honestly.

Avoid retesting out of habit.

Retest when:

Do not retest the same idea hoping for luck.

Patterns emerge.

You start noticing:

Decisions get faster.

Confidence replaces anxiety.

Website testing should follow ad testing.

Why?

Ads define intent.

Pages fulfill intent.

Once ads are stable:

This sharpens conversion efficiency.

Chasing perfection.

Google Ads will never be “done.”

Markets change. Algorithms evolve. User behavior shifts.

Testing is ongoing. That is not a flaw. It is the edge.

If every decision in Google Ads had to be backed by a clean test result, how different would your strategy look?

That question alone changes how campaigns are built, scaled, and trusted.
